Motion detection is a captivating field that opens up a world of possibilities in computer vision. If you’re eager to explore the realm of motion detection using OpenCV in Python, you’ve come to the right place. This blog is designed to walk you through the process, whether you’re a beginner or an experienced developer. With the power of OpenCV at your fingertips, you’ll be equipped to detect and track motion in real-time video streams or pre-recorded videos. Get ready to unravel the intricacies of motion detection as we embark on this enlightening journey together!

What is Motion Detection?

Before diving into the “how” of motion detection, let’s take a moment to understand the “what”. Motion detection, in simple terms, is the process of identifying and tracking changes in the position of objects within a video or image sequence. It allows us to detect when something moves in a given frame and can be incredibly valuable in various fields.

Imagine a security camera capturing video footage of a room. Motion detection algorithms can analyze each frame and pinpoint any instances where movement occurs. This ability to detect motion forms the foundation for applications like surveillance systems, object tracking, and video analysis.

The primary goal of motion detection is to identify regions in a video where significant changes have occurred compared to a reference frame. These changes can include objects entering or exiting the scene, objects changing positions, or even subtle alterations such as a flickering light. By pinpointing these areas of motion, we can analyze and respond to events of interest in real time or during post-processing.


Applications of Motion Detection

Motion detection has a wide range of applications across various industries and fields. Let’s explore some of the key areas where motion detection plays a crucial role:

- Surveillance Systems and Security: Motion detection is used to monitor and secure environments, detecting suspicious movements and unauthorized activities.

- Object Tracking and Activity Recognition: Motion detection helps track moving objects, enabling analysis of their behavior and patterns in domains such as traffic monitoring and sports analysis.

- Video Analysis and Anomaly Detection: Motion detection assists in extracting meaningful information from videos, identifying regions of interest, and detecting anomalies in crowd monitoring and industrial quality control.

- Human-Computer Interaction: Motion detection enables gesture recognition, revolutionizing interactions with technology through hand or body movements, seen in gaming consoles and virtual reality experiences.

- Automation and Robotics: Motion detection helps robots navigate their surroundings, avoid obstacles, and interact with objects in manufacturing, logistics, and healthcare industries.

- Energy Conservation and Smart Environments: Motion detection controls lighting and HVAC systems based on occupancy, conserving energy and automating smart homes and buildings.

Motion Detection Techniques

When it comes to motion detection using OpenCV in Python, several techniques can be employed to detect and track movement in video streams or recorded videos. Let’s explore some of these techniques below:

- Frame Differencing: Frame differencing compares consecutive frames, highlighting areas with significant change. By thresholding the difference image, we can isolate moving objects.

- Background Subtraction: Background subtraction creates a background model and subtracts it from subsequent frames to detect the foreground objects, indicating motion.

- Noise Reduction: Techniques like Gaussian blurring reduce noise and smooth the difference images, improving the accuracy of motion detection.

- Contour Detection: Contours identify the boundaries of objects within regions of motion, enabling us to extract individual objects for further analysis.

- Object Tracking: Object tracking involves monitoring the movement of specific objects over time. Centroid tracking tracks the centroid of objects, providing position and trajectory information.

Setting Up Your Environment

Before we jump into motion detection with OpenCV and Python, let’s ensure our environment is ready for action.

Installing OpenCV and Python

To begin, make sure you have Python installed on your system. You can download the latest version from the official Python website (https://www.python.org/downloads/).

Once Python is installed, we need to install OpenCV, a powerful computer vision library, using the following command:

```
pip install opencv-python
```

Additionally, we may need to install other libraries like NumPy for efficient numerical computations and Matplotlib for visualization.

Importing Required Libraries

Once the installations are complete, let’s import the necessary libraries in our Python script:

```python
import cv2
import numpy as np
import matplotlib.pyplot as plt
```

Capturing Video and Image Frames

Before we start detecting motion, we need to obtain frames from video sources or images. OpenCV provides convenient functions for this purpose.

To process video files or streams, we use OpenCV’s VideoCapture class. It allows us to access video sources, such as webcams or pre-recorded videos.

Once we have access to a video source, we can use the read() method to obtain frames.

Learn in depth how to Capture Video and Read Frames in OpenCV Python.

Motion Detection Algorithm OpenCV Python

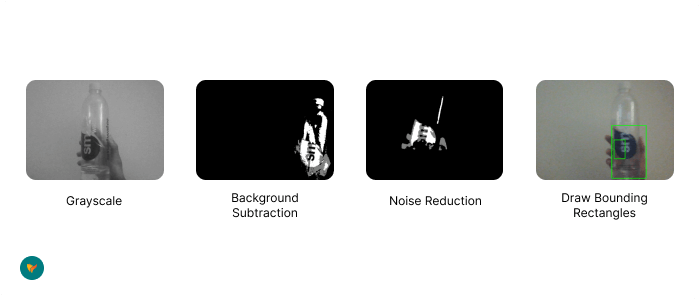

To detect motion with OpenCV and Python, you can use the following steps:

- Capture the video stream using a camera or a video file.

- Convert each frame of the video stream to grayscale.

- Apply a background subtraction algorithm to detect the regions where motion is occurring.

- Apply morphological operations to reduce noise and fill gaps in the detected regions.

- Draw bounding boxes around the detected regions to visualize the motion.

Let’s dive into each step in more detail.

Step 1: Capture the Video Stream

The first step in motion detection is to capture the video stream using OpenCV’s VideoCapture class.

This function allows you to connect to a camera or to read a video file.

Here’s an example:

```python
import cv2

cap = cv2.VideoCapture(0)  # Connect to the default camera

while True:
    ret, frame = cap.read()  # Read a frame from the video stream
    if not ret:  # Stop if no frame could be read
        break

    # Display the frame
    cv2.imshow('Video Stream', frame)

    if cv2.waitKey(1) & 0xFF == ord('q'):  # Exit if the 'q' key is pressed
        break

cap.release()  # Release the camera
cv2.destroyAllWindows()  # Close all windows
```

In this example, we’re connecting to the default camera (0) and reading frames from the video stream in a loop. If read() reports that no frame is available, the loop stops.

We’re also displaying each frame in a window using the imshow function. Finally, we’re checking if the ‘q’ key is pressed to exit the loop.

Step 2: Convert the Frames to Grayscale

The next step is to convert each frame of the video stream to grayscale.

Color information is not essential for this kind of motion detection, and a single-channel grayscale image is cheaper to process than a three-channel color image.

Here’s an example:

```python
import cv2

cap = cv2.VideoCapture(0)

while True:
    ret, frame = cap.read()
    if not ret:  # Stop if no frame could be read
        break

    # Convert the frame to grayscale
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    # Display the grayscale frame
    cv2.imshow('Grayscale Frame', gray)

    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```

In this example, we’re using the cvtColor function to convert the BGR (Blue, Green, Red) color image to a grayscale image. We’re also displaying the grayscale image in a window.

Step 3: Apply Background Subtraction

The next step is to apply a background subtraction algorithm to detect the regions where motion is occurring.

The basic idea behind background subtraction is to maintain a model of the static background, built from previous frames, and compare each new frame against it. Pixels that differ significantly from the model are marked as foreground, which highlights the regions where motion has occurred.

There are several background subtraction algorithms available in OpenCV, including MOG2 and KNN. In this tutorial, we’ll use the MOG2 algorithm.

Here’s an example:

```python
import cv2

cap = cv2.VideoCapture(0)

# Create the MOG2 background subtractor object
mog = cv2.createBackgroundSubtractorMOG2()

while True:
    ret, frame = cap.read()
    if not ret:  # Stop if no frame could be read
        break

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    # Apply background subtraction
    fgmask = mog.apply(gray)

    # Display the foreground mask
    cv2.imshow('Foreground Mask', fgmask)

    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```

In this example, we’re creating a MOG2 background subtractor object using the createBackgroundSubtractorMOG2 function.

We’re then applying the background subtraction to the grayscale frame using the subtractor’s apply method. Finally, we’re displaying the foreground mask in a window.

Step 4: Apply Morphological Operations

The foreground mask obtained from background subtraction may contain noise and gaps.

To reduce the noise, we can erode and then dilate the mask (a morphological opening), which removes small specks. Filling gaps takes the opposite order: dilation followed by erosion (a closing).

Here’s an example:

```python
import cv2

cap = cv2.VideoCapture(0)
mog = cv2.createBackgroundSubtractorMOG2()

# The kernel never changes, so create it once before the loop
kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (5, 5))

while True:
    ret, frame = cap.read()
    if not ret:  # Stop if no frame could be read
        break

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    fgmask = mog.apply(gray)

    # Apply morphological operations to clean up the mask
    fgmask = cv2.erode(fgmask, kernel, iterations=1)
    fgmask = cv2.dilate(fgmask, kernel, iterations=1)

    cv2.imshow('Foreground Mask', fgmask)

    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```

In this example, we’re using the getStructuringElement function to create an elliptical kernel for erosion and dilation.

We are then applying erosion and dilation to the foreground mask using the erode and dilate functions. Eroding first and dilating second removes small specks of noise while roughly preserving the size of larger regions.

Step 5: Draw Bounding Boxes

The final step is to draw bounding boxes around the detected regions to visualize the motion. We can use the findContours function to find the contours (i.e., the boundary curves) of the connected components in the foreground mask.

We can then use the boundingRect function to compute the bounding box of each contour. Here’s an example:

```python
import cv2

cap = cv2.VideoCapture(0)
mog = cv2.createBackgroundSubtractorMOG2()
kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (5, 5))

while True:
    ret, frame = cap.read()
    if not ret:  # Stop if no frame could be read
        break

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    fgmask = mog.apply(gray)

    # Remove small specks of noise from the mask
    fgmask = cv2.erode(fgmask, kernel, iterations=1)
    fgmask = cv2.dilate(fgmask, kernel, iterations=1)

    # Find the outlines of the remaining foreground regions
    contours, hierarchy = cv2.findContours(fgmask, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

    for contour in contours:
        # Ignore small contours
        if cv2.contourArea(contour) < 1000:
            continue

        # Draw bounding box around contour
        x, y, w, h = cv2.boundingRect(contour)
        cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)

    cv2.imshow('Motion Detection', frame)

    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```

In this example, we’re iterating over all the contours found in the foreground mask using a for loop. We’re ignoring small contours (i.e., those with an area less than 1000 pixels) using the contourArea function.

For the remaining contours, we’re computing the bounding box using the boundingRect function and drawing it on the original frame using the rectangle function.


Tips and Tricks for Optimal Motion Detection

To achieve optimal motion detection results, consider the following tips and tricks:

- Choosing the Right Threshold Values: Experiment with different threshold values to achieve the desired sensitivity for motion detection. Too low, and you may capture unwanted noise. Too high, and you may miss subtle movements.

- Handling Lighting Conditions: Changes in lighting conditions can affect motion detection accuracy. Consider techniques such as background modeling or adaptive thresholding to handle varying illumination.

- Dealing with Shadows: Shadows can interfere with motion detection. Preprocessing techniques like color-based segmentation or morphological operations can help mitigate shadow-related challenges.

Wrapping Up

Congratulations! You’ve now mastered the art of motion detection with OpenCV and Python. We covered the fundamental concepts, explored different techniques, and implemented motion detection algorithms. Remember to experiment, have fun, and keep honing your skills in computer vision.

Now it’s your turn! Put your newfound knowledge to use and create your motion detection projects. Feel free to share your experiences, challenges, and innovative ideas in the comments section below.

Frequently Asked Questions (FAQs)

What is motion detection in OpenCV?

Motion detection in OpenCV refers to the process of identifying and tracking changes in the position of objects within a video sequence using the OpenCV library in Python.

How does motion detection work?

Motion detection typically involves techniques like frame differencing or background subtraction. Frame differencing compares consecutive frames to identify areas with significant change, while background subtraction creates a background model and subtracts it from subsequent frames to isolate moving objects.

Can OpenCV detect motion in real time?

Absolutely! OpenCV provides functions and algorithms that enable real-time motion detection. By leveraging techniques like frame differencing or background subtraction, you can detect and track motion in video streams captured by webcams or other video sources.

Can motion detection be applied to pre-recorded videos?

Yes, OpenCV allows you to apply motion detection techniques to pre-recorded videos as well. You can read video files using the VideoCapture object and process each frame to detect motion using frame differencing or background subtraction algorithms.

Can motion detection be combined with other computer vision tasks?

Absolutely! Motion detection can be combined with other computer vision tasks like object detection, tracking, or recognition. By integrating multiple techniques, you can create powerful systems for various applications, such as intelligent surveillance or video analytics.

What’s Next?

Ready to explore more in computer vision? Here are some related blogs to delve into:

- Lane Detection with OpenCV and Python

- Face Recognition System in Python

- Shape Detection with OpenCV and Python

- Blob Detection with OpenCV and Python

Expand your skills and knowledge by exploring these exciting topics. Happy coding!
