Are you looking to handle large datasets efficiently in Python? Python generators produce data on the fly, so you never have to hold an entire dataset in memory at once. In this blog, we’ll explore Python generators from the basics to advanced usage, and by the end you’ll know how to put them to work in your own code. Let’s dive in! And don’t forget to read the fun fact about Python generators at the end.

Understanding The Problem

Before diving into the intricacies of Python generators, let’s first understand why they are worth learning. Python generators come to the rescue in scenarios where memory limitations or large datasets pose a hurdle.

Consider a situation where you need to handle a massive dataset that cannot fit entirely into memory. This predicament can lead to memory errors and inefficiencies. Python generators offer an elegant solution by generating values on the fly, conserving memory, and improving performance.

Additionally, generators are invaluable when working with sequences and streams of data. By iterating over values one at a time, they enable efficient processing and eliminate the need to load and process entire datasets at once.
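To make the memory difference concrete, here is a minimal sketch that compares a list with an equivalent generator using sys.getsizeof. The names squares_list and squares_gen are illustrative only, and the exact byte counts depend on your Python version and platform, so none are shown here. The generator is written with the generator expression syntax covered later in this post.

```python
import sys

# A list materializes every value up front.
squares_list = [n * n for n in range(1_000_000)]

# A generator only stores the state needed to produce the next value.
squares_gen = (n * n for n in range(1_000_000))

# getsizeof reports the container itself, not the integers it refers to.
list_size = sys.getsizeof(squares_list)
gen_size = sys.getsizeof(squares_gen)  # small, regardless of the range length

print(f"list: {list_size} bytes, generator: {gen_size} bytes")
print(sum(squares_gen) == sum(squares_list))  # True: both produce the same values
```

The generator stays tiny because it never holds more than one value at a time, while the list grows with the number of elements.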

By understanding the problems that Python generators solve, such as memory constraints and data handling challenges, we can appreciate their significance. Generators empower us to work efficiently, tackle complex tasks, and write cleaner, more readable code.

Now, let’s embark on our exploration of Python generators, uncovering the magic that simplifies our coding experiences.

What is a Python Generator?

A generator in Python is a special kind of function that produces values on the fly rather than building them all in memory first. It uses the “yield” keyword to pause execution and hand a value back to the caller. Calling a generator function does not run its body right away; it returns a generator object, which is an iterator you can loop over or pass to next().

Generators are memory-efficient and improve performance by generating values as needed. They are a valuable tool for working with large datasets and writing efficient, readable code.

Python Generator Syntax

In Python, generators can be created using two different syntaxes: Generator Functions and Generator Expressions. Let’s explore both of these syntaxes in detail.

Generator Function

A generator function is defined as a normal function using the def keyword, followed by the function name. But inside the function, instead of using the return keyword, we use the yield keyword. This is what makes the function a generator.

The yield statement temporarily pauses the function’s execution and yields a value to the caller.

Here’s an example of the syntax for a generator function:

    def function_name():
        yield value

    gen = function_name()
    for i in gen:
        print(i)

In this syntax, we define a generator function called function_name(). Inside the function, we use the yield statement to yield a value. When we call the generator function, it returns a generator object. We can then iterate over the generator object using a for loop or extract values using the next() function.
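As a concrete version of the template above, here is a small sketch. The function name first_n_evens is just an illustration, not anything built into Python.

```python
def first_n_evens(n):
    """Yield the first n even numbers, one at a time."""
    for k in range(n):
        yield 2 * k

gen = first_n_evens(3)

# Calling the function did not run its body; it returned a generator object.
first = next(gen)   # runs until the first yield and returns 0
rest = list(gen)    # drains the remaining values

print(first, rest)  # 0 [2, 4]
```

Notice that list(gen) only sees the values that were not already consumed by next().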

Generator Expression

Generator expressions provide a concise way to create generators in a single line. They have a similar syntax to list comprehensions, but instead of using square brackets, we enclose the expression within parentheses.

Here’s an example of the syntax for a generator expression:

    gen = (expression for variable in iterable if condition)

    for i in gen:
        print(i)

In this syntax, we create a generator expression by enclosing the expression within parentheses. We can include an optional if condition to filter the values produced by the generator.
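Here is a small, concrete generator expression to go with the template. The word list is made up for illustration.

```python
words = ["generator", "yield", "lazy", "iterator", "next"]

# Lengths of words longer than four characters, computed lazily.
long_word_lengths = (len(w) for w in words if len(w) > 4)

# Many built-ins accept any iterable, so the generator can be passed directly.
total = sum(long_word_lengths)
print(total)  # 9 + 5 + 8 = 22
```

Because sum() pulls the values one by one, no intermediate list of lengths is ever created.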

Now that we have understood the syntax of both types of generators, let’s move on to how generators work in Python.

How does Python Generator work?

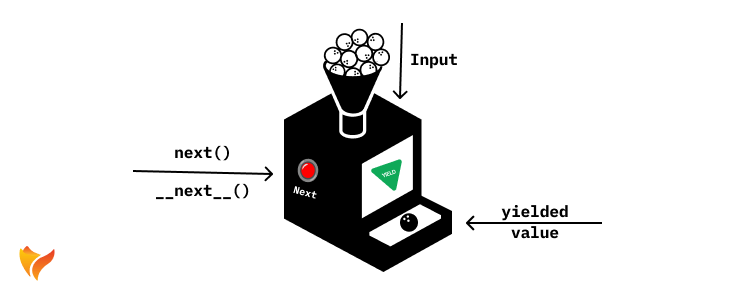

Now that we have covered the basics of Python generators, let’s dive deeper into understanding how they actually work. To grasp the concept more effectively, let’s imagine a fun and interactive scenario.

Imagine a generator as a fascinating machine with a funnel-shaped container filled with balls. Each ball represents a value that the generator can produce. When we interact with the generator by calling the next() function, it’s like pressing a button on the machine, which dispenses a ball for us to use.

In code, we define a generator function using the def keyword, just like any other function. However, instead of using the return statement to send back a value, we use the yield keyword. The yield statement temporarily suspends the function’s execution and returns a value to the caller.

Let’s take a look at a simple example:

    def number_generator(n):
        for i in range(n):
            yield i

    # Usage:
    gen = number_generator(5)
    print(next(gen))  # Output: 0
    print(next(gen))  # Output: 1
    print(next(gen))  # Output: 2
    print(next(gen))  # Output: 3
    print(next(gen))  # Output: 4

In this example, we have a generator function called number_generator that yields numbers from 0 to n-1. When we call next(gen), the generator starts executing until it encounters the yield statement. At this point, it pauses its execution and yields the value of i. The execution state of the generator is preserved, allowing us to continue from where we left off when we call next() again.

📝 Note: Generators only produce values when requested. This lazy evaluation approach contributes to their efficiency, especially when working with large datasets.
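Lazy evaluation is also what makes infinite sequences possible. The sketch below assumes a hypothetical naturals() generator that never terminates, and uses itertools.islice from the standard library to take only the first few values.

```python
from itertools import islice

def naturals():
    """Yield 0, 1, 2, ... without ever terminating."""
    n = 0
    while True:
        yield n
        n += 1

# islice requests only five values, so the endless loop is never a problem.
first_five = list(islice(naturals(), 5))
print(first_five)  # [0, 1, 2, 3, 4]
```

A list could never hold this sequence, but the generator only ever computes the values someone asks for.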

Generator Function vs Expression

While learning the syntax, we saw that there are two types of generators we can create: Generator Functions and Generator Expressions. But we didn’t dive into the details of how these two differ from each other. Let’s explore the distinctions between Generator Functions and Generator Expressions in this section.

| Feature | Generator Function | Generator Expression |
|---|---|---|
| Definition | Defined as a function using the def keyword | Defined as an expression within parentheses ( ) |
| Syntax | Uses the yield keyword to yield values | Similar to list comprehensions, but with parentheses ( ) instead of brackets [ ] |
| Memory Usage | Efficient memory usage, as values are generated on-the-fly | Efficient memory usage, similar to generator functions |
| Iteration Control | Control over the iteration process using yield statements | No explicit control over the iteration process |
| Function-like Features | Can accept parameters and have complex logic within the function | Not as flexible as generator functions, limited to expressions |
| Readability | Suitable for complex logic and multiple steps | Concise and compact, ideal for simple transformations |
| Use Cases | Complex data generation, custom sequence generation | Simple transformations, one-line operations |
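To see the two forms side by side, here is a short sketch that produces the same sequence both ways. The names even_squares and even_squares_expr are illustrative.

```python
def even_squares(limit):
    """Generator function: room for parameters and several statements."""
    for n in range(limit):
        if n % 2 == 0:
            yield n * n

# Generator expression: the same logic written as a single expression.
even_squares_expr = (n * n for n in range(10) if n % 2 == 0)

from_function = list(even_squares(10))
from_expression = list(even_squares_expr)

print(from_function)                     # [0, 4, 16, 36, 64]
print(from_function == from_expression)  # True
```

The function version can be called again with a different limit, while the expression is a one-off object tied to range(10).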

Python Generators StopIteration Exception

When working with Python generators, it’s essential to understand the behavior of the StopIteration exception. The StopIteration exception is raised when a generator has exhausted all its generated values and there are no more items to yield. It serves as a signal to indicate the end of the iteration.

The StopIteration exception plays a crucial role in for loops and other iterator-consuming constructs. When a generator raises StopIteration, it informs the loop to exit and ensures that the program doesn’t continue trying to fetch values that don’t exist.

To demonstrate this, let’s consider a simple example using a generator function:

    def countdown_generator(n):
        while n > 0:
            yield n
            n -= 1

    countdown = countdown_generator(5)
    for num in countdown:
        print(num)

In this example, the countdown_generator function generates a countdown sequence from a given number down to 1. The for loop iterates over the generator and prints each value. However, behind the scenes, the for loop handles the StopIteration exception and terminates the loop when the generator is exhausted.

Output

    5
    4
    3
    2
    1

📝 Note: The StopIteration exception is automatically handled by the loop construct, so you don’t need to explicitly catch it.
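To picture what the loop construct does on your behalf, here is a rough sketch of a for loop written out by hand. It is an approximation for illustration, not the interpreter’s actual implementation, and it uses its own small countdown function so that it runs on its own.

```python
def countdown(n):
    while n > 0:
        yield n
        n -= 1

collected = []

# Roughly what `for num in countdown(3): collected.append(num)` does.
iterator = iter(countdown(3))
while True:
    try:
        num = next(iterator)
    except StopIteration:
        break  # the for loop swallows the exception and exits quietly
    collected.append(num)

print(collected)  # [3, 2, 1]
```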

However, if you’re manually iterating over a generator using the next() function or __next__() method, you need to be aware of the StopIteration exception. When there are no more values to yield, calling next() or __next__() on the generator will raise StopIteration. To handle this, you can use a try-except block to catch the exception and handle it accordingly.

    countdown = countdown_generator(5)

    while True:
        try:
            print(f"Using next(): {next(countdown)}")
            print(f"Using __next__(): {countdown.__next__()}")
        except StopIteration:
            print("Countdown finished!")
            break

In this example, we manually iterate over the countdown generator using the next() function and __next__() method within a while loop. We catch the StopIteration exception and print a message to indicate that the countdown has finished.

Output:

    Using next(): 5
    Using __next__(): 4
    Using next(): 3
    Using __next__(): 2
    Using next(): 1
    Countdown finished!

Yield vs Return

Our understanding of generators would be incomplete without exploring the distinction between the “yield” and “return” statements. Both of these statements play a crucial role in the behavior of generator functions. Let’s dive into the key differences between them:

| Feature | Yield | Return |
|---|---|---|
| Purpose | Produces a value and suspends execution temporarily | Terminates the function and returns a value |
| Usage | Used within generator functions | Used in any function or method |
| Execution | Resumes right after the last yield when the next value is requested | Terminates the function immediately |
| Continuation | Function retains its state between requests for values | Function does not retain its state |
| Multiple Values | Can be used multiple times in a generator | Only one return executes per call, and it ends the function |
| Control Flow | Pauses the function and hands control back to the caller | Returns a value and exits the function |
| Iteration | Yielded values can be consumed one by one with a for loop or next() | Does not provide automatic iteration |

📝 Note: While “yield” and “return” have distinct functionalities, they can also be used together in certain scenarios. This allows us to combine the benefits of both statements in a generator function, yielding values on demand and ultimately terminating the function when necessary.
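Here is a small sketch of yield and return working together. When a generator executes return, it stops, and the returned value is attached to the StopIteration exception as its value attribute. The function name read_until_negative and its messages are made up for this example.

```python
def read_until_negative(values):
    """Yield values until a negative one appears, then stop with a summary."""
    count = 0
    for v in values:
        if v < 0:
            return f"stopped after {count} values"  # ends the generator early
        yield v
        count += 1
    return f"exhausted after {count} values"

gen = read_until_negative([3, 8, -1, 5])
seen = []
summary = None
while True:
    try:
        seen.append(next(gen))
    except StopIteration as stop:
        summary = stop.value  # the returned value rides on the exception
        break

print(seen)     # [3, 8]
print(summary)  # stopped after 2 values
```

A plain for loop would discard the returned value, so catching StopIteration by hand is one way to read it.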

Sending Data to Python Generator

Now that we have explored the concept of Python generators, you might be under the impression that generators only generate values on the fly. But hold on, there’s more to it! Would you believe me if I told you that generators can also receive values? Yes, it’s true! In addition to yielding values, generators have the ability to accept input from the caller. Let’s dive into how this works.

To send values into a generator, we use the send() method. This method allows us to communicate with the generator and pass values to it during its execution. Let’s take a look at an example to make things clearer:

    def square_generator():
        x = yield
        yield x ** 2

    # Creating a generator instance
    gen = square_generator()

    # Initializing the generator
    next(gen)

    # Sending values into the generator
    result = gen.send(5)  # Sends 5 to the generator and stores the squared number
    print(result)  # Output: 25

In the example above, we define a generator function called square_generator(). Within the function, we use the yield statement to receive values sent by the caller. Notice that we have two yield statements: one to receive the value and another to yield the squared value back to the caller.

To send values into the generator, we first need to initialize it by calling next(gen). This allows the generator to reach its first yield statement and pause its execution. Then, we use the send() method to send a value to the generator. In our example, we send the value 5. The generator receives this value and performs the necessary computation, and the result is stored in the result variable.

📝 Note: The first call to next(gen) is required to advance the generator to its first yield before using send(); sending a non-None value to a generator that has not started raises a TypeError. Additionally, the value passed to send() becomes the result of the yield expression the generator is paused at, so assign that expression to a variable (as in x = yield) to capture it.
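A generator can keep receiving values in a loop, too. The following sketch assumes a hypothetical running_average() generator that accepts numbers through send() and hands back the average of everything it has seen so far.

```python
def running_average():
    """Receive numbers via send() and yield the average so far."""
    total = 0.0
    count = 0
    average = None
    while True:
        value = yield average  # hand back the average, then wait for a number
        total += value
        count += 1
        average = total / count

avg = running_average()
next(avg)  # prime the generator: run it up to the first yield

results = [avg.send(x) for x in (10, 20, 60)]
print(results)  # [10.0, 15.0, 30.0]
```

Each send() both delivers a value and returns whatever the generator yields next, which is what makes the two-way conversation possible.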

Python Generator Pipelining and Chaining

Now that we have gained a solid understanding of Python generators, let’s take our knowledge one step further. How about creating a pipeline or chain of generators? The idea itself sounds exciting, doesn’t it? Well, let’s dive in and explore generator pipelining and chaining.

A generator pipeline refers to the process of connecting multiple generators together in a sequential manner. Each generator in the pipeline takes the output of the previous generator as its input and produces its own set of values. This allows us to perform a series of transformations or computations on a stream of data efficiently.
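As a quick taste of the idea, here is a tiny pipeline built from generator expressions. The sample lines are made up, and each stage simply wraps the one before it.

```python
raw_lines = ["  alpha \n", "\n", " beta\n", "   \n", "gamma  \n"]

# Stage 1: strip surrounding whitespace from each line.
stripped = (line.strip() for line in raw_lines)
# Stage 2: drop lines that are empty after stripping.
non_empty = (line for line in stripped if line)
# Stage 3: transform what is left.
shouted = (line.upper() for line in non_empty)

# Nothing has run yet; list() pulls each item through all three stages in turn.
result = list(shouted)
print(result)  # ['ALPHA', 'BETA', 'GAMMA']
```

Only one line is in flight at any moment, so the same structure would work on a file far too large to load at once.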

To illustrate this concept, let’s consider an example. Imagine we have a list of numbers, and we want to apply a series of transformations: filtering out odd numbers, squaring the remaining numbers, and then summing them up. We can achieve this using a generator pipeline.

Here’s how we can implement it:

    def numbers():
        for num in range(1, 7):
            print(f"Number: {num}")
            yield num

    def filter_odd(numbers):
        for num in numbers:
            if num % 2 == 0:
                print("Even")
                yield num

    def square(numbers):
        for num in numbers:
            print(f"Square: {num ** 2}")
            yield num ** 2

    def sum_numbers(numbers):
        total = 0
        for num in numbers:
            total += num
            print(f"Total: {total}")
        yield total

    # Creating the generator pipeline
    pipeline = sum_numbers(square(filter_odd(numbers())))

    # Consuming the final result
    result = next(pipeline)
    print(result)  # Output: 56

In the example above, we first define individual generator functions (numbers, filter_odd, square, and sum_numbers). Each generator after the first takes the output of the previous generator as input and applies a specific transformation.

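By passing each generator as the argument to the next one, we create a pipeline that processes the data one value at a time. No stage runs until we request a value from the last generator, which is why the printed messages from the different stages are interleaved. In the end, we obtain the desired result by consuming the final generator in the pipeline.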

Illustration

Let’s understand the Python Generator Pipeline and Chaining with the help of an image.

Output:

    Number: 1
    Number: 2
    Even
    Square: 4
    Total: 4
    Number: 3
    Number: 4
    Even
    Square: 16
    Total: 20
    Number: 5
    Number: 6
    Even
    Square: 36
    Total: 56
    56

Call Sub-Generator Using Yield From

In Python, we can also use the yield from syntax to chain generators or call a sub-generator from within a generator. This allows us to delegate the iteration control to the sub-generator and retrieve its yielded values directly. Here’s an example:

    def sub_generator():
        yield 'Hello'
        yield 'from'
        yield 'sub-generator!'

    def main_generator():
        yield 'This is the main generator.'
        yield from sub_generator()
        yield 'Continuing with the main generator.'

    # Usage:
    for item in main_generator():
        print(item)

The output will be:

    This is the main generator.
    Hello
    from
    sub-generator!
    Continuing with the main generator.

The yield from syntax simplifies the code structure and makes it more modular and readable. It allows us to seamlessly integrate the values yielded by the sub-generator into the main generator’s output.

📝 Note: Exceptions raised by the sub-generator will be propagated to the main generator as well.
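The sketch below shows that propagation in action. The generator names are hypothetical: the sub-generator raises a ValueError, and the delegating generator catches it around the yield from line.

```python
def flaky_sub_generator():
    yield "first"
    raise ValueError("sub-generator failed")

def delegating_generator():
    try:
        yield from flaky_sub_generator()
    except ValueError as exc:
        # The exception raised inside the sub-generator surfaces here.
        yield f"recovered: {exc}"

items = list(delegating_generator())
print(items)  # ['first', 'recovered: sub-generator failed']
```

If the delegating generator did not catch the exception, it would continue up to whoever is iterating over it.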

Python Generator as Decorator

Now that we have a solid understanding of Python generators, let’s explore an interesting aspect of generators: their ability to be used as decorators. Yes, you heard it right! Generators can play the role of decorators and add additional functionality to existing functions. It’s like giving a function a special power-up!

Syntax of Generator Decorators

To use a generator as a decorator, we follow a simple syntax. We define a decorator function that takes in a function as an argument and returns a generator-based wrapper around it. Here’s an example to illustrate the syntax:

    def generator_decorator(func):
        def wrapper(*args, **kwargs):
            # Perform pre-function call operations
            # Yield modified function output
            yield func(*args, **kwargs)
            # Perform post-function call operations
        return wrapper

    @generator_decorator
    def my_function():
        # Function implementation
        pass

In this example, we define a decorator called generator_decorator that takes a func argument representing the function we want to decorate. Inside the decorator, we define a nested generator function called wrapper that wraps the original function. The wrapper function can perform operations before and after calling the original function, and it yields the modified output. Because wrapper contains yield, calling the decorated function returns a generator object, and the original function only runs once a value is requested with next() or a for loop.

Example 1: Using Generator Decorator for Caching

One practical use case of generator decorators is implementing a caching mechanism to store and retrieve the results of expensive function calls. Let’s consider an example where we have a function that performs a computationally intensive operation and we want to cache its results to improve performance. We can achieve this using a generator decorator. Here’s an example:

    def cache_results(func):
        cache = {}

        def wrapper(*args):
            if args in cache:
                yield cache[args]
            else:
                result = func(*args)
                cache[args] = result
                yield result

        return wrapper

    @cache_results
    def compute_factorial(n):
        if n == 0:
            return 1
        else:
            return n * next(compute_factorial(n-1))

    fact = compute_factorial(5)
    print(fact)
    print(next(fact))

In this example, we define a generator decorator called cache_results. Inside the decorator, we maintain a cache dictionary to store the results of function calls. The wrapper function checks if the arguments are already in the cache. If so, it yields the cached result. Otherwise, it computes the result using the original function, stores it in the cache, and yields the result. This way, subsequent calls with the same arguments will retrieve the result from the cache instead of recomputing it.

Output:

    <generator object cache_results.<locals>.wrapper at 0x000001BF5A449A10>
    120

Note that printing fact only shows a generator object; the factorial itself is computed when we call next() on it. The cache pays off on repeated work: once a result is stored, later calls with the same argument return it without recomputing. To try a bigger input, we can calculate the factorial of a large number that is still within the limits of the maximum recursion depth, for instance 300:

    <generator object cache_results.<locals>.wrapper at 0x0000017D0D8F9A10>
    306057512216440636035370461297268629388588804173576999416776741259476533176716867465515291422477573349939147888701726368864263907759003154226842927906974559841225476930271954604008012215776252176854255965356903506788725264321896264299365204576448830388909753943489625436053225980776521270822437639449120128678675368305712293681943649956460498166450227716500185176546469340112226034729724066333258583506870150169794168850353752137554910289126407157154830282284937952636580145235233156936482233436799254594095276820608062232812387383880817049600000000000000000000000000000000000000000000000000000000000000000000000000

Example 2: Using Generator Decorator for Retries

I didn’t originally plan to provide another example of a generator decorator, but I couldn’t resist showcasing how versatile and fascinating generator decorators can be. In this second example, we’ll explore how generator decorators can serve as a retry mechanism, which can come in handy when dealing with potentially unreliable operations or network-related issues.

Let’s dive into the code:

    import time

    error = 0

    def retry_decorator(max_retries=3, wait_time=1):
        def decorator(generator_func):
            def wrapper(*args, **kwargs):
                global error
                for _ in range(max_retries + 1):
                    try:
                        yield from generator_func(*args, **kwargs)
                        break
                    except Exception as e:
                        print(f"Retrying after error: {e}")
                        error += 1
                        time.sleep(wait_time)
            return wrapper
        return decorator

    @retry_decorator(max_retries=5, wait_time=2)
    def data_generator():
        yield 1
        yield 2
        if error < 2:
            raise Exception(f"Code[{error}] Error Occurred")
        yield 3
        yield 4
        yield 5

    gen = data_generator()
    for i in gen:
        print(i)

Code Explanation

Let’s understand how this retry mechanism works:

- We define a retry_decorator function that takes two parameters: max_retries and wait_time. This function returns a decorator.

- The decorator itself is a function that takes the generator function as an argument. It returns a wrapper function.

- The wrapper function is where the magic happens. It uses a loop to attempt to execute the generator function multiple times, up to the specified max_retries.

- Inside the loop, the generator function is invoked using yield from to yield its values. If an exception occurs, we catch it and print an error message. We also increment the error variable to keep track of the number of errors encountered.

- After an exception, the wrapper function pauses execution for the specified wait_time using the time.sleep() function.

- If the generator function completes without any exceptions, we break out of the loop and stop retrying.

- Finally, we apply the retry_decorator to the data_generator function using the @ syntax. This decorates the generator function with the retry mechanism.

Output

When we run the code, we see the following output:

    1
    2
    Retrying after error: Code[0] Error Occurred
    1
    2
    Retrying after error: Code[1] Error Occurred
    1
    2
    3
    4
    5

As you can see, the data_generator function initially raises an exception twice (error < 2 condition), simulating an error occurring during data generation. However, thanks to the retry mechanism provided by the generator decorator, the generator function is retried until it successfully completes without any exceptions. Note that each retry restarts the generator from the beginning, which is why 1 and 2 appear again after every error. This ensures the generator is resilient to errors and can continue providing the desired data.

Using generator decorators in this way allows us to enhance the functionality of generators by adding powerful retry capabilities. It’s just one example of how you can leverage the flexibility and versatility of generator decorators to handle various scenarios and improve the robustness of your code.

Feel free to experiment with different values for max_retries and wait_time to customize the behavior of the retry mechanism according to your specific requirements.

Uses of Generator Decorators

Generator decorators offer a wide range of possibilities for enhancing the behavior of functions. Some other common use cases include:

- Pre and Post-processing: Decorators can add validation or cleanup steps before and after yielding values, ensuring data integrity.

- Filtering Generator Output: Decorators can filter or transform the output of generators based on specific criteria, customizing the generated sequence.

- Profiling and Logging: Decorators can incorporate profiling, logging, or debugging capabilities into generators, aiding in performance analysis and troubleshooting.
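As a sketch of the filtering use case from the list above, here is a hypothetical only_positive decorator. It wraps a generator function and passes through only the values greater than zero; functools.wraps is used so the decorated function keeps its original name.

```python
import functools

def only_positive(generator_func):
    """Hypothetical decorator: pass through only values greater than zero."""
    @functools.wraps(generator_func)
    def wrapper(*args, **kwargs):
        for value in generator_func(*args, **kwargs):
            if value > 0:
                yield value
    return wrapper

@only_positive
def readings():
    yield from [4, -2, 0, 7, -9, 1]

filtered = list(readings())
print(filtered)  # [4, 7, 1]
```

The same wrapper shape works for logging or validation: replace the if check with whatever should happen around each yielded value.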

Closing a Generator in Python

As we reach the end of our exploration into Python generators, it’s important to understand how to properly close a generator. Closing a generator is a crucial step to ensure efficient memory management and prevent any potential resource leaks.

To close a generator, we use the close() method, which is available on generator objects. When called, this method raises a GeneratorExit exception inside the generator function, allowing for any cleanup operations to be performed before the generator is fully closed.

Let’s consider an example to illustrate the closing process:

    def number_generator(n):
        try:
            for i in range(n):
                yield i
        except GeneratorExit:
            print("Generator closing...")
        finally:
            print("Cleanup operations here")

    gen = number_generator(5)
    for num in gen:
        print(num)
        if num > 2:
            gen.close()

In the above code, we define a generator function number_generator() that yields numbers from 0 to n-1. Inside the function, we use a try-except block to catch the GeneratorExit exception. This allows us to perform any necessary cleanup operations, such as closing open file handles or releasing resources.

When we’re done using the generator, we call gen.close() to explicitly close it. This triggers the GeneratorExit exception, and the control flow enters the except block. We can include custom cleanup code within the finally block to ensure that all necessary resources are properly handled.

Output:

    0
    1
    2
    3
    Generator closing...
    Cleanup operations here

📝 Note: Closing a generator is especially important when working with generators that rely on external resources or have side effects.
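To illustrate that note, here is a minimal sketch of a generator that owns a resource. It assumes a hypothetical read_lines() helper and uses io.StringIO as a stand-in for a real file, with a finally block that releases the stream even when the generator is closed early.

```python
import io

def read_lines(stream):
    """Yield stripped lines from a file-like object and close it when done."""
    try:
        for line in stream:
            yield line.rstrip("\n")
    finally:
        # Runs on normal exhaustion and also when close() is called early.
        stream.close()

stream = io.StringIO("one\ntwo\nthree\n")
lines = read_lines(stream)

first = next(lines)  # 'one'
lines.close()        # stop early; GeneratorExit triggers the finally block

print(first, stream.closed)  # one True
```

Putting the cleanup in finally means the caller does not have to remember which exit path the generator took.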

Fun Fact!

Generators, a powerful feature in Python, have an interesting origin story. Did you know that the concept of generators in Python was inspired by an influential language called Icon? Icon, a high-level programming language developed in the late 1970s, made generators a central part of the language, using them to produce a sequence of results on demand.

Python’s developers sought to bring similar functionality to Python. Generators were proposed in PEP 255 and introduced in Python 2.2, borrowing the fundamental idea from Icon. Later releases extended them with coroutine-style features such as send() and yield from.

Generators quickly became a popular feature among Python developers due to their ability to handle large datasets efficiently and elegantly. They provide a convenient way to generate values on-the-fly, making them invaluable for tasks like streaming data processing, handling infinite sequences, and optimizing memory usage.

Python’s implementation of generators made the concept accessible to a broader audience, and it has since become a core feature of the language. Today, generators are widely used in various domains, including data analysis, web development, and scientific computing.

The next time you utilize a generator in Python to process data lazily or create an infinite sequence effortlessly, remember its roots in the generators of Icon.

So, do your friends know about this awesome fact? Share it with them now and impress them with your knowledge of Python generators and their fascinating origins!

Wrapping Up

In conclusion, Python generators offer a powerful and efficient approach to programming. By understanding their principles and techniques, you can enhance your code’s readability, performance, and memory efficiency.

Throughout this guide, we explored the basics of generators, including how they differ from regular functions and the role of the yield statement in generating values on-the-fly. We learned how to create generators using functions and expressions, unlocking their potential for handling large datasets and complex operations.

By utilizing generators, you can simplify your code, improve memory usage, and enhance performance. They are particularly useful in scenarios such as stream processing, scientific computations, and web scraping.

We encourage you to continue exploring and experimenting with generators in your own projects. As you delve deeper, you’ll discover even more innovative ways to leverage their capabilities.

Thank you for joining us on this journey through Python generators. Happy coding!

Frequently Asked Questions (FAQs)

What is a Python generator?

A Python generator is a special type of function that can be paused and resumed during its execution. It generates a sequence of values using the yield statement instead of return. Generators are memory-efficient and allow for lazy evaluation, making them ideal for iterating over large datasets or generating values on-the-fly.

Can you send values into a generator?

Yes, you can indeed send values into a generator using a special method called send(). This method allows for two-way communication between the caller and the generator function.

How do you iterate over a generator?

You can iterate over a generator in Python using a for loop. Each iteration resumes the generator from where it last paused and retrieves the next yielded value until the generator is exhausted. Alternatively, you can use the next() function to manually retrieve the next value from a generator.

Are generators reusable?

No, generators are not reusable in the traditional sense. Once a generator is exhausted, meaning it has yielded all its values, you cannot iterate over it again. However, you can call the generator function again to obtain a new generator and start a fresh iteration.

What is the difference between generators and coroutines?

While generators and coroutines share similarities, they have distinct differences. Generators produce values when iterated over, while coroutines can consume values through the send() method. Coroutines allow for bidirectional communication and can be used for cooperative multitasking, whereas generators primarily focus on generating values.